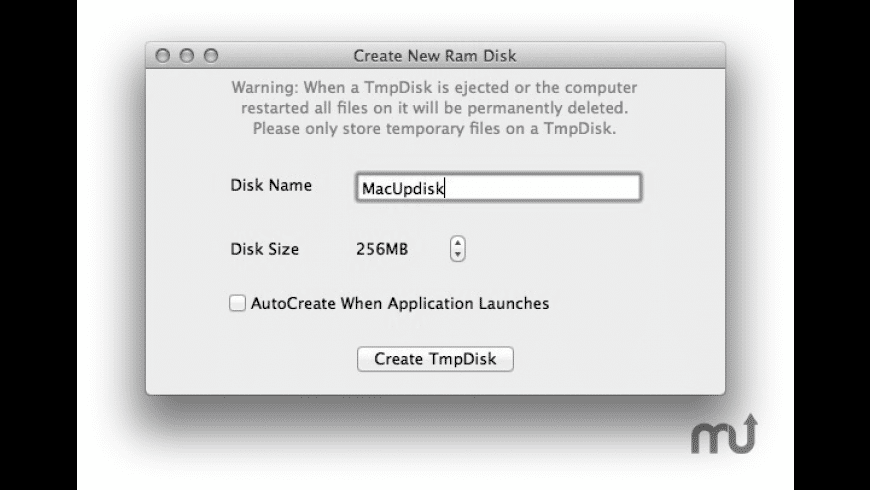

This article goes over RAM disks and the software programs you can use to create them on your Mac.

TmpDisk: This will create a 250 MB RAM disk; you can change the size by changing the “512000” value. The size of the disk is given as a number of 512-byte sectors, which means the size in bytes has to be divided by 512. For example, to calculate the size parameter of a 4 GB volume, the following formula is used: 4 × 1024 × 1024 × 1024 / 512 = 8,388,608 sectors.

Slurm is an open-source, fault-tolerant, and highly scalable cluster management and job scheduling system for large and small Linux clusters.

NodeName=c1 Arch=x86_64 CoresPerSocket=8 CPUAlloc=0 CPUErr=0 CPUTot=32 CPULoad=0.01 AvailableFeatures=(null) ActiveFeatures=(null) Gres=(null) NodeAddr=c1 NodeHostName=c1 Version=17.02 OS=Linux RealMemory=1 AllocMem=0 FreeMem=1138 Sockets=2 Boards=1 State=IDLE+DRAIN ThreadsPerCore=2 TmpDisk=0 Weight=1 Owner=N/A MCSlabel=N/A Partitions=normal BootTime=2018-10-29T08:24:18 SlurmdStartTime=2018.

TmpDisk: an open-source RAM disk management app.

Ultra RAM Disk: installs as a menu bar item that allows you to create RAM disks when needed.

RAMDisk: an app for creating as well as backing up RAM disks, so that you can save their contents and restore the RAM disks when you restart your Mac.
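As a quick check of the sector arithmetic above, here is a minimal Python sketch. It assumes binary units (1 MB = 1024 × 1024 bytes), which is what makes 250 MB come out to exactly 512000 sectors; the function name ram_disk_sectors is hypothetical and not part of any RAM disk tool.

```python
SECTOR_SIZE = 512  # RAM disk size is given as a count of 512-byte sectors

MIB = 1024 ** 2  # assumption: "MB" means 1024 * 1024 bytes
GIB = 1024 ** 3  # assumption: "GB" means 1024 * 1024 * 1024 bytes


def ram_disk_sectors(size_bytes: int) -> int:
    """Convert a RAM disk size in bytes to the sector count the size parameter expects."""
    if size_bytes <= 0 or size_bytes % SECTOR_SIZE:
        raise ValueError("size must be a positive multiple of 512 bytes")
    return size_bytes // SECTOR_SIZE


if __name__ == "__main__":
    print(ram_disk_sectors(250 * MIB))  # the 250 MB disk described above
    print(ram_disk_sectors(4 * GIB))    # the 4 GB example
```

Rejecting sizes that are not a multiple of 512 avoids silently rounding down to a slightly smaller disk than you asked for.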

- Installing on VMware vSphere

- Installing ManageIQ

- Configuring ManageIQ

- Configuring a Database

- Additional Configuration for Appliances on VMware vSphere

- Logging In After Installing ManageIQ

- Appliance Console Command-Line Interface (CLI)

Installing ManageIQ

Installing ManageIQ consists of the following steps:

Downloading the appliance for your environment as a virtual machine image template.

Setting up a virtual machine based on the appliance.

Configuring the ManageIQ appliance.

After you have completed all the procedures in this guide, you will have a working environment on which additional customizations and configurations can be performed.

Obtaining the appliance

Uploading the Appliance on VMware vSphere

Uploading the ManageIQ appliance file onto VMware vSphere systems has the following requirements:

44 GB of space on the chosen vSphere datastore.

12 GB RAM.

4 VCPUs.

Administrator access to the vSphere Client.

Depending on your infrastructure, allow time for the upload.
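The requirements above can be turned into a quick pre-flight check before starting the upload. This is a minimal sketch: the VmPlan fields and the unmet_requirements helper are hypothetical, and you would fill in the numbers by hand from your vSphere inventory; nothing here talks to vSphere, and administrator access is not something it can check.

```python
from dataclasses import dataclass
from typing import List

# Minimums taken from the requirements list above.
MIN_DATASTORE_GB = 44
MIN_RAM_GB = 12
MIN_VCPUS = 4


@dataclass
class VmPlan:
    """Hypothetical description of where and how the appliance will be deployed."""
    datastore_free_gb: float
    ram_gb: float
    vcpus: int


def unmet_requirements(plan: VmPlan) -> List[str]:
    """Return a human-readable message for each requirement the plan does not meet."""
    problems = []
    if plan.datastore_free_gb < MIN_DATASTORE_GB:
        problems.append(f"datastore: {plan.datastore_free_gb} GB free, need {MIN_DATASTORE_GB} GB")
    if plan.ram_gb < MIN_RAM_GB:
        problems.append(f"memory: {plan.ram_gb} GB, need {MIN_RAM_GB} GB")
    if plan.vcpus < MIN_VCPUS:
        problems.append(f"vCPUs: {plan.vcpus}, need {MIN_VCPUS}")
    return problems


if __name__ == "__main__":
    for message in unmet_requirements(VmPlan(datastore_free_gb=40, ram_gb=16, vcpus=4)):
        print(message)
```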

Note:

These are the procedural steps as of the time of writing. For more information, consult the VMware documentation.

Use the following procedure to upload the ManageIQ appliance OVF template from your local file system using the vSphere Client.

In the vSphere Client, select File > Deploy OVF Template. The Deploy OVF Template wizard appears.

Specify the source location and click Next.

Select Deploy from File to browse your file system for the OVF template, for example manageiq-vsphere-ivanchuk-4.ova.

Select Deploy from URL to specify a URL to an OVF template located on the internet.

View the OVF Template Details page and click Next.

Select the deployment configuration from the drop-down menu and click Next. The option selected typically controls the memory settings, number of CPUs and reservations, and application-level configuration parameters.

Select the host or cluster on which you want to deploy the OVF template and click Next.

Select the host on which you want to run the ManageIQ appliance, and click Next.

Navigate to and select the resource pool where you want to run the ManageIQ appliance, and click Next.

Select a datastore to store the deployed ManageIQ appliance, and click Next. Make sure to select a datastore large enough to accommodate the virtual machine and all of its virtual disk files.

Select the disk format to store the virtual machine virtual disks, and click Next.

Select Thin Provisioned to allocate storage on demand as data is written to the virtual disks.

Select Thick Provisioned to allocate all storage immediately.

For each network specified in the OVF template, select a network by right-clicking the Destination Network column in your infrastructure to set up the network mapping, and click Next.

The IP Allocation page does not require any configuration changes. Leave the default settings in the IP Allocation page and click Next.

Set the user-configurable properties and click Next. The properties to enter depend on the selected IP allocation scheme. For example, you are prompted for IP-related information for the deployed virtual machines only in the case of a fixed IP allocation scheme.

Review your settings and click Finish.

The progress of the import task appears in the vSphere Client Status panel.

Configuring ManageIQ

After installing ManageIQ and running it for the first time, you must perform some basic configuration. To configure ManageIQ, you must at a minimum:

Add a disk to the infrastructure hosting your appliance.

Configure the database.

Configure the ManageIQ appliance using the internal appliance console.

Accessing the Appliance Console

Start the appliance and open a terminal console.

Enter the appliance_console command. The ManageIQ appliance summary screen displays.

Press Enter to manually configure settings.

Press the number for the item you want to change, and press Enter. The options for your selection are displayed.

Follow the prompts to make the changes.

Press Enter to accept a setting where applicable.

Note:

The ManageIQ appliance console automatically logs out after five minutes of inactivity.


Configuring a Database

ManageIQ uses a database to store information about the environment. Before using ManageIQ, configure the database options for it; ManageIQ provides the following two options for database configuration:

Install an internal PostgreSQL database to the appliance

Configure the appliance to use an external PostgreSQL database

Configuring an Internal Database

Start the appliance and open a terminal console.

Enter the appliance_console command. The ManageIQ appliance summary screen displays.

Press Enter to manually configure settings.

Select Configure Application from the menu.

You are prompted to create or fetch an encryption key.

If this is the first ManageIQ appliance, choose Create key.

If this is not the first ManageIQ appliance, choose Fetch key from remote machine to fetch the key from the first appliance. For worker and multi-region setups, use this option to copy the key from another appliance.

Note:

All ManageIQ appliances in a multi-region deployment must use the same key.

Choose Create Internal Database for the database location.

Choose a disk for the database. This can be either a disk you attached previously, or a partition on the current disk.

Red Hat recommends using a separate disk for the database. If there is an unpartitioned disk attached to the virtual machine, the dialog will show options similar to the following:

Enter 1 to choose /dev/vdb for the database location. This option creates a logical volume using this device and mounts the volume to the appliance in a location appropriate for storing the database. The default location is /var/lib/pgsql, which can be found in the environment variable $APPLIANCE_PG_MOUNT_POINT.

Enter 2 to continue without partitioning the disk. A second prompt will confirm this choice. Selecting this option results in using the root filesystem for the data directory (not advised in most cases).
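After choosing option 1, you may want a script to confirm where the database volume ended up. A minimal Python sketch, assuming it runs on the appliance where APPLIANCE_PG_MOUNT_POINT is set; the helper names are hypothetical, and falling back to /var/lib/pgsql when the variable is unset is an assumption of this sketch.

```python
import os
from typing import Mapping, Optional

# Default data location described above.
DEFAULT_PG_MOUNT_POINT = "/var/lib/pgsql"


def pg_mount_point(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the database mount point, preferring $APPLIANCE_PG_MOUNT_POINT.

    Falling back to /var/lib/pgsql when the variable is unset is an assumption.
    """
    env = os.environ if env is None else env
    return env.get("APPLIANCE_PG_MOUNT_POINT", DEFAULT_PG_MOUNT_POINT)


def database_on_separate_volume(env: Optional[Mapping[str, str]] = None) -> bool:
    """True if the mount point is a mount of its own, as option 1 would create.

    os.path.ismount returns False for a plain directory or a missing path.
    """
    return os.path.ismount(pg_mount_point(env))


if __name__ == "__main__":
    path = pg_mount_point()
    status = "separate mount" if database_on_separate_volume() else "not a separate mount"
    print(path, status)
```

This is only a rough check: a path that is not a mount point of its own may still live on some other mounted filesystem, not necessarily the root one.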

Enter Y or N for Should this appliance run as a standalone database server?

Select Y to configure the appliance as a database-only appliance. As a result, the appliance is configured as a basic PostgreSQL server, without a user interface.

Select N to configure the appliance with the full administrative user interface.

When prompted, enter a unique number to create a new region.

Creating a new region destroys any existing data on the chosen database.

Create and confirm a password for the database.

ManageIQ then configures the internal database. This takes a few minutes. After the database is created and initialized, you can log in to ManageIQ.

Configuring an External Database

Depending on your setup, you may choose to configure the appliance to use an external PostgreSQL database. For example, a region can have only one database. However, a region can be segmented into multiple zones, such as a database zone, a user interface zone, and a reporting zone, where each zone provides a specific function. The appliances in these zones must be configured to use an external database.

The postgresql.conf file used with ManageIQ databases requires specific settings for correct operation. For example, it must correctly reclaim table space, control session timeouts, and format the PostgreSQL server log for improved system support. Due to these requirements, Red Hat recommends that external ManageIQ databases use a postgresql.conf file based on the standard file used by the ManageIQ appliance.

Ensure you configure the settings in postgresql.conf to suit your system. For example, customize the shared_buffers setting according to the amount of physical memory available on the external system hosting the PostgreSQL instance. In addition, depending on the aggregate number of appliances expected to connect to the PostgreSQL instance, it may be necessary to alter the max_connections setting.
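To make the two tuning knobs concrete, here is a small Python sketch that derives starting values for them. The 25% figure for shared_buffers is the general PostgreSQL rule of thumb for a dedicated database server, not a ManageIQ recommendation; the per-appliance connection count and the headroom are hypothetical inputs you would have to measure or estimate for your own deployment.

```python
# Rough starting values for the two postgresql.conf settings discussed above.
# Assumptions (not ManageIQ guidance): shared_buffers starts at 25% of the host's
# RAM, and each appliance opens about `conns_per_appliance` connections.


def suggest_shared_buffers_mb(total_ram_mb: int, fraction: float = 0.25) -> int:
    """Return a shared_buffers size in MB as a fraction of total RAM."""
    return int(total_ram_mb * fraction)


def suggest_max_connections(appliances: int, conns_per_appliance: int, headroom: int = 10) -> int:
    """Return max_connections sized for every appliance plus spare slots for admin sessions."""
    return appliances * conns_per_appliance + headroom


def render_settings(total_ram_mb: int, appliances: int, conns_per_appliance: int) -> str:
    """Render the two settings as postgresql.conf lines."""
    return "\n".join([
        f"shared_buffers = {suggest_shared_buffers_mb(total_ram_mb)}MB",
        f"max_connections = {suggest_max_connections(appliances, conns_per_appliance)}",
    ])


if __name__ == "__main__":
    # Hypothetical host: 16 GB of RAM serving 5 appliances.
    print(render_settings(total_ram_mb=16384, appliances=5, conns_per_appliance=40))
```

Treat the output as a first guess to refine against the standard ManageIQ postgresql.conf and observed load, not as final values.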

Note:

ManageIQ requires PostgreSQL version 9.5.

Because the postgresql.conf file controls the operation of all databases managed by a single instance of PostgreSQL, do not mix ManageIQ databases with other types of databases in a single PostgreSQL instance.

Start the appliance and open a terminal console.

Enter the appliance_console command. The ManageIQ appliance summary screen displays.

Press Enter to manually configure settings.

Select Configure Application from the menu.

You are prompted to create or fetch a security key.

If this is the first ManageIQ appliance, choose Create key.

If this is not the first ManageIQ appliance, choose Fetch key from remote machine to fetch the key from the first appliance.

Note:

All ManageIQ appliances in a multi-region deployment must use the same key.

Choose Create Region in External Database for the database location.

Enter the database hostname or IP address when prompted.

Enter the database name or leave blank for the default (vmdb_production).

Enter the database username or leave blank for the default (root).

Enter the chosen database user’s password.

Confirm the configuration if prompted.

ManageIQ will then configure the external database.

Configuring a Worker Appliance

You can use multiple appliances to facilitate horizontal scaling, as well as for dividing up work by roles. Accordingly, configure an appliance to handle work for one or many roles, with workers within the appliance carrying out the duties for which they are configured. You can configure a worker appliance through the terminal. The following steps demonstrate how to join a worker appliance to an appliance that already has a region configured with a database.

Start the appliance and open a terminal console.

Enter the appliance_console command. The ManageIQ appliance summary screen displays.

Press Enter to manually configure settings.

Select Configure Application from the menu.

You are prompted to create or fetch a security key. Since this is not the first ManageIQ appliance, choose 2) Fetch key from remote machine. For worker and multi-region setups, use this option to copy the security key from another appliance.

Note:

All ManageIQ appliances in a multi-region deployment must use the same key.

Choose Join Region in External Database for the database location.

Enter the database hostname or IP address when prompted.

Enter the port number or leave blank for the default (5432).

Enter the database name or leave blank for the default (vmdb_production).

Enter the database username or leave blank for the default (root).

Enter the chosen database user’s password.

Confirm the configuration if prompted.
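Before running the wizard, it can help to confirm that the worker can reach the database with the same values. This sketch builds a standard PostgreSQL connection URI from the defaults listed above, which you could then hand to a client such as psql. The helper name, the host db.example.com, and the password are placeholders; IPv6 literal hosts are not handled. Note that a password embedded in a command-line URI can be visible to other users of the machine.

```python
from urllib.parse import quote

# Defaults quoted by appliance_console in the steps above.
DEFAULT_PORT = 5432
DEFAULT_DBNAME = "vmdb_production"
DEFAULT_USER = "root"


def vmdb_uri(host: str, password: str, port: int = DEFAULT_PORT,
             dbname: str = DEFAULT_DBNAME, user: str = DEFAULT_USER) -> str:
    """Build a postgresql:// URI, percent-encoding the user, password, and database name."""
    return (f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
            f"@{host}:{port}/{quote(dbname, safe='')}")


if __name__ == "__main__":
    # Placeholder host and password; substitute your database appliance's values.
    print(vmdb_uri("db.example.com", "changeme"))
```

Percent-encoding matters because characters such as @ or : in a password would otherwise be read as URI delimiters.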

Additional Configuration for Appliances on VMware vSphere

Installing VMware VDDK on ManageIQ

To install VMware VDDK:

Download the required VDDK version (VMware-vix-disklib-[version].x86_64.tar.gz) from the VMware website.

Note:

If you do not already have a login ID to VMware, then you will need to create one. At the time of this writing, the file can be found by navigating to Downloads > vSphere. Select the version from the drop-down list, then click the Drivers & Tools tab. Expand Automation Tools and SDKs, and click Go to Downloads next to the VMware vSphere Virtual Disk Development Kit version. Alternatively, find the file by searching for it using the Search on the VMware site.

See VMware documentation for information about their policy concerning backward and forward compatibility for VDDK.

Download and copy the VMware-vix-disklib-[version].x86_64.tar.gz file to the /root directory of the appliance.

Start an SSH session into the appliance.

Extract and install the VMware-vix-disklib-[version].x86_64.tar.gz file using the following commands:

Run ldconfig to instruct ManageIQ to find the newly installed VDDK library.

Note:

Use the following command to verify the VDDK files are listed and accessible to the appliance:
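One way to script this check is to read the dynamic linker cache with ldconfig -p after running ldconfig, and list any entries for the VDDK library. This is a sketch, not the documented verification step: the library name libvixDiskLib is an assumption about how VDDK names its shared object, so adjust the filter if your VDDK version differs. Run it as root on the appliance, since ldconfig may not be on an unprivileged user's PATH.

```python
import subprocess
from typing import List

# Assumption: the VDDK shared library is named libvixDiskLib (e.g. libvixDiskLib.so.N).
VDDK_LIBRARY = "libvixDiskLib"


def vddk_entries(ldconfig_output: str) -> List[str]:
    """Return the lines of `ldconfig -p` output that mention the VDDK library."""
    return [line.strip() for line in ldconfig_output.splitlines() if VDDK_LIBRARY in line]


def check_vddk() -> List[str]:
    """Run `ldconfig -p` (print the linker cache) and filter it for VDDK entries."""
    result = subprocess.run(["ldconfig", "-p"], capture_output=True, text=True, check=True)
    return vddk_entries(result.stdout)


if __name__ == "__main__":
    entries = check_vddk()
    print("\n".join(entries) if entries else "VDDK library not found in the ldconfig cache")
```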

Restart the ManageIQ appliance.

The VDDK is now installed on the ManageIQ appliance. This enables use of the SmartState Analysis server role on the appliance.

Tuning Appliance Performance

By default, the ManageIQ appliance uses the tuned service and its virtual-guest profile to optimize performance. In most cases, this profile provides the best performance for the appliance.

However, on some VMware setups (for example, with a large vCenter database), the following additional tuning may further improve appliance performance:

When using the virtual-guest profile in tuned, edit the vm.swappiness setting in the tuned.conf file from the default of vm.swappiness = 30 to 1.

Use the noop scheduler instead. See the VMware documentation for more details on the best scheduler for your environment. See Setting the Default I/O Scheduler in the Red Hat Enterprise Linux Performance Tuning Guide for instructions on changing the default I/O scheduler.
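Both changes above come down to small text edits and inspections, so they can be scripted. A minimal sketch, assuming the tuned.conf line has the key = value form quoted above and that the kernel lists the available schedulers in /sys/block/<device>/queue/scheduler with the active one in brackets. The helper names and the sda device are hypothetical. The sketch only inspects and rewrites text; writing the edited tuned.conf back and switching schedulers should still follow the Red Hat guide. On newer multi-queue kernels, the counterpart of noop is listed as none.

```python
import re
from pathlib import Path


def set_sysctl_value(conf_text: str, key: str, value: str) -> str:
    """Rewrite `key = old` to `key = value` in tuned.conf-style text, keeping the spacing."""
    pattern = re.compile(rf"^([ \t]*{re.escape(key)}[ \t]*=[ \t]*).*$", re.MULTILINE)
    new_text, count = pattern.subn(lambda m: m.group(1) + value, conf_text)
    if count == 0:
        raise KeyError(f"{key} not found")
    return new_text


def active_scheduler(sysfs_text: str) -> str:
    """Return the scheduler shown in brackets, e.g. 'cfq' from 'noop deadline [cfq]'."""
    match = re.search(r"\[([^\]]+)\]", sysfs_text)
    if match is None:
        raise ValueError("no active scheduler marked in brackets")
    return match.group(1)


if __name__ == "__main__":
    print(set_sysctl_value("[sysctl]\nvm.swappiness = 30\n", "vm.swappiness", "1"))
    # Hypothetical device name; check the disks your appliance actually uses.
    text = Path("/sys/block/sda/queue/scheduler").read_text()
    print("active I/O scheduler:", active_scheduler(text))
```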

Logging In After Installing ManageIQ

Once ManageIQ is installed, you can log in and perform administration tasks.

To log in to ManageIQ for the first time after installing:

Navigate to the URL for the login screen (https://xx.xx.xx.xx on the virtual machine instance).

Enter the default credentials (Username: admin | Password: smartvm) for the initial login.

Click Login.

Changing the Default Login Password

Change your password to ensure more private and secure access to ManageIQ.

Navigate to the URL for the login screen (https://xx.xx.xx.xx on the virtual machine instance).

Click Update Password beneath the Username and Password text fields.

Enter your current Username and Password in the text fields.

Input a new password in the New Password field.

Repeat your new password in the Verify Password field.

Click Login.

Appliance Console Command-Line Interface (CLI)

Currently, the appliance_console_cli feature is a subset of the full functionality of the appliance_console itself, and covers functions most likely to be scripted by using the command-line interface (CLI).

After starting the ManageIQ appliance, log in with a user name of root and the default password of smartvm. This displays the Bash prompt for the root user.

Enter the appliance_console_cli or appliance_console_cli --help command to see a list of options available with the command, or simply enter appliance_console_cli --option <argument> directly to use a specific option.

Database Configuration Options

| Option | Description |
|---|---|
| --region (-r) | region number (create a new region in the database; requires database credentials to be passed) |
| --internal (-i) | internal database (create a database on the current appliance) |
| --dbdisk | database disk device path (for configuring an internal database) |
| --hostname (-h) | database hostname |
| --port | database port (defaults to 5432) |
| --username (-U) | database username (defaults to root) |
| --password (-p) | database password |
| --dbname (-d) | database name (defaults to vmdb_production) |

v2_key Options

| Option | Description |
|---|---|
| --key (-k) | create a new v2_key |
| --fetch-key (-K) | fetch the v2_key from the given host |
| --force-key (-f) | create or fetch the key even if one exists |
| --sshlogin | ssh username for fetching the v2_key (defaults to root) |
| --sshpassword | ssh password for fetching the v2_key |

IPA Server Options

| Option | Description |
|---|---|
| --host (-H) | set the appliance hostname to the given name |
| --ipaserver (-e) | IPA server FQDN |
| --ipaprincipal (-n) | IPA server principal (default: admin) |
| --ipapassword (-w) | IPA server password |
| --ipadomain (-o) | IPA server domain (optional). Will be based on the appliance domain name if not specified. |
| --iparealm (-l) | IPA server realm (optional). Will be based on the domain name of the ipaserver if not specified. |
| --uninstall-ipa (-u) | uninstall IPA client |

Note:

To configure authentication through an IPA server, you can use Configure External Authentication (httpd) in the appliance_console, or you can optionally configure external authentication through the appliance_console_cli (command-line interface).

Specifying --host updates the hostname of the appliance. If this step was already performed through the appliance_console, along with the necessary updates to /etc/hosts when DNS is not properly configured, the --host option can be omitted.

Certificate Options

| Option | Description |
|---|---|
| --ca (-c) | CA name used for certmonger (default: ipa) |
| --postgres-client-cert (-g) | install certs for postgres client |
| --postgres-server-cert | install certs for postgres server |
| --http-cert | install certs for http server (to create certs/httpd* values for a unique key) |
| --extauth-opts (-x) | external authentication options |

Note: The certificate options augment the functionality of the certmonger tool, enabling you to create a certificate signing request (CSR) and to tell certmonger the directories in which to store the keys.

Other Options

| Option | Description |
|---|---|
| --logdisk (-l) | log disk path |
| --tmpdisk | initialize the given device for temp storage (volume mounted at /var/www/miq_tmp) |
| --verbose (-v) | print more debugging info |

Example Usage.

To create a new database locally on the server by using /dev/sdb:

To copy the v2_key from a host some.example.com to local machine:

You could combine the two to join a region where db.example.com is the appliance hosting the database:

To configure external authentication:

To uninstall external authentication:
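If you script tasks like the ones listed above, a small helper that assembles argument lists from the long option names in the tables keeps shell quoting out of the picture. This is a sketch, not documented usage: the helper name, the region number, and the passwords are hypothetical, and you should confirm which options a given task requires with appliance_console_cli --help.

```python
import shlex
from typing import List, Union

OptionValue = Union[str, int, bool, None]


def cli_args(**options: OptionValue) -> List[str]:
    """Turn keyword arguments into an appliance_console_cli argument list.

    Underscores become dashes (fetch_key -> --fetch-key). True adds a bare flag;
    None or False leaves the option out; anything else (including 0) is passed
    as the option's argument.
    """
    args = ["appliance_console_cli"]
    for name, value in options.items():
        flag = "--" + name.replace("_", "-")
        if value is True:
            args.append(flag)
        elif value is not None and value is not False:
            args.extend([flag, str(value)])
    return args


if __name__ == "__main__":
    # Hypothetical values: region 1, a placeholder password, and the example host above.
    local_db = cli_args(region=1, internal=True, dbdisk="/dev/sdb", password="changeme")
    fetch_key = cli_args(fetch_key="some.example.com", sshlogin="root", sshpassword="changeme")
    print(shlex.join(local_db))
    print(shlex.join(fetch_key))
    # subprocess.run(local_db, check=True) would execute it on the appliance.
```

Checking for True and None explicitly, rather than truthiness, keeps a legitimate value such as region 0 from being dropped.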

Recovering an entire OSD node

A Ceph Recovery Story

I wanted to share with everyone a situation that happened to me over the weekend. This is a tale of a disaster, sheer panic, and recovery from said disaster. I know some may just want a quick answer to how “X” happened to me and how I did “Y” to solve it. But I truly believe that if you want to be a better technician and all-around admin, you need to know the methodology of how the solution was found. Let's get into what happened, why it happened, how I fixed it, and how to prevent this from happening again.

What happened

It started with the simple need to upgrade a few packages on each of my cluster nodes (non-Ceph-related). Standard operating procedure dictates that this be done during a low-traffic part of the week. My cluster setup is small, consisting of 4 OSD nodes, an MDS, and 3 monitors. Only wanting to upgrade standard packages on the machine, I decided to start with my first OSD node (let's just call it node1). I upgraded the packages fine, set the cluster to noout with ceph osd set noout, and proceeded to reboot.

…Unbeknownst to me, the No. 2 train from Crap City was about to pull into Pants Station.

The reboot finishes, CentOS starts to load up, and then I go to try and log in. Nothing. Maybe I typed the password wrong. Nothing. Caps Lock? Nope. Long story short, changing my password in single-user mode hosed my entire OS. That's fine, though; I can rebuild this node and re-add it. Let's mark these disks out one at a time, let the cluster heal, and add in a new node (after reinstalling the OS).

After removing most of the disks, I notice I have 3 PGs stuck in a stale+active+clean state. What?!

The pool in this case is pool 11 (in pg 11.48, the 11 tells you which pool it's from). Checking on the status of pool 11, I find it is inaccessible and has caused an outage.
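The PG naming rule above is easy to capture in code. A minimal Python sketch; the helper name is hypothetical, and it relies on the fact that Ceph prints the PG number after the dot in hexadecimal (so 11.48 is PG 0x48 of pool 11).

```python
from typing import Tuple


def parse_pgid(pgid: str) -> Tuple[int, int]:
    """Split a PG ID such as '11.48' into (pool ID, PG number).

    The part before the dot is the decimal pool ID; the part after it is the
    PG number within that pool, printed in hexadecimal.
    """
    pool, sep, pg = pgid.partition(".")
    if not sep or not pool or not pg:
        raise ValueError(f"not a PG ID: {pgid!r}")
    return int(pool), int(pg, 16)


if __name__ == "__main__":
    pool, pg = parse_pgid("11.48")
    print(f"pool {pool}, pg {pg:#x}")
```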

…and the train has arrived.

But why did this happen?

Panic sets in a bit, and I mentally start going through the 5 “whys” of troubleshooting.

- Problem – My entire node is dead, I lost access to its disks, and one of my pools is completely unavailable.

- Why? An upgrade hosed the OS.

- Why? The pool outage was possibly related to the OSD disks.

- Why? The pool outage was PG-related.

What did all 3 of those PGs have in common? All of their OSDs resided on the same node. But how? I have all my pools set in CRUSH to replicate between hosts.

Note: You can see the OSDs that make up a PG at the end of the detail status for it ‘last acting [73,64,60]’

Holy crap, the pool never got set to the “hosts” CRUSH rule (rule 1).
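The check that exposed the problem, whether every OSD in a PG's acting set sits on the same host, can be sketched in Python. The OSD-to-host map is hypothetical here (on a real cluster you would build it from ceph osd tree), and the status line in the example is illustrative rather than exact ceph health detail output.

```python
import re
from typing import Dict, List, Set


def last_acting(detail_line: str) -> List[int]:
    """Extract OSD IDs from text such as 'last acting [73,64,60]'."""
    match = re.search(r"last acting \[([0-9,\s]+)\]", detail_line)
    if match is None:
        raise ValueError("no 'last acting [...]' list found")
    return [int(osd) for osd in match.group(1).split(",")]


def hosts_for(acting: List[int], osd_host: Dict[int, str]) -> Set[str]:
    """Return the set of hosts that hold the given OSDs."""
    return {osd_host[osd] for osd in acting}


if __name__ == "__main__":
    # Hypothetical OSD-to-host map for illustration.
    osd_host = {60: "node1", 64: "node1", 73: "node1", 12: "node2"}
    acting = last_acting("pg 11.48 ... last acting [73,64,60]")
    if len(hosts_for(acting, osd_host)) == 1:
        print("every replica of this PG lives on one host")
```

A host-level CRUSH rule should never produce an acting set whose hosts collapse to a single entry, so this is a quick way to spot pools still on the wrong rule.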

How do we fix this

Let's update our whys.

- Problem – My entire node is dead, I lost access to its disks, and one of my pools is completely unavailable.

- Why? An upgrade hosed the OS.

- Why? The pool outage was possibly related to the OSD disks.

- Why? The pool outage was PG-related.

- Why? The pool failed because 3 of its PGs resided solely on a failed node. These disks were also marked out and removed from the cluster.

There you have it. We need to get the data back from these disks. A quick Google search for how to fix these PGs will lead you to the most common answer: delete the pool and start over. Well, that's great for people who don't have anything in their pool. Looks like I'm on my own here.

The first thing I want to do is get this node reinstalled with an OS. Once that's complete, I want to install Ceph and deploy some admin keys to it. Now the tricky part: how do I get these disks added back into the cluster while retaining all their data and OSD IDs?

Well, we need to map the affected OSDs back to their respective directories. Since this OS is fresh, let's recreate those directories. I need to recover data from OSDs 60, 64, 68, 70, 73, 75, and 77, so we'll make only these.

Thankfully, I knew where each /dev/sd$ device gets mapped. If you don't remember, that's OK: each disk has a unique ID stored on the disk itself. Using that, we can cross-reference it with our actual cluster info!

Boom, there we go: this disk belonged to osd.60. Let's mount it to the appropriate Ceph directory, /var/lib/ceph/osd/ceph-60.
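The cross-referencing step can be sketched in Python. It assumes the disk is temporarily mounted somewhere and that, as with filestore OSDs of that era, its data partition holds an fsid file; it also assumes the JSON from ceph osd dump --format json lists each OSD with osd and uuid fields. The helper names and the mount path are hypothetical.

```python
import json
from pathlib import Path
from typing import Optional


def osd_id_for_fsid(osd_dump_json: str, fsid: str) -> Optional[int]:
    """Look up which OSD ID a disk's FSID belongs to in `ceph osd dump --format json` output.

    Assumes each entry in the "osds" list carries "osd" (the ID) and "uuid" (the FSID).
    """
    dump = json.loads(osd_dump_json)
    wanted = fsid.strip().lower()
    for entry in dump.get("osds", []):
        if entry.get("uuid", "").lower() == wanted:
            return entry["osd"]
    return None


def fsid_from_disk(mount_dir: str) -> str:
    """Read the fsid file from a temporarily mounted OSD data partition."""
    return Path(mount_dir, "fsid").read_text().strip()


if __name__ == "__main__":
    # Hypothetical temporary mount point; the JSON would come from the ceph CLI.
    fsid = fsid_from_disk("/mnt/recovered-osd")
    dump = Path("osd-dump.json").read_text()
    print(fsid, "->", osd_id_for_fsid(dump, fsid))
```

Returning None when nothing matches covers the case described next, where the OSD has already been removed from the cluster.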

OK, so maybe you did what I did and REMOVED the OSD entirely from the cluster. How do you find out its OSD number then? Easier than you think. All you do is take that FSID and use it to create a new OSD. Once complete, it will return the new OSD, created with its original OSD ID!

OK, so now what? Let's start one of our disks and see what happens (set it to debug 10 just in case).

Well it failed with this error

Googling this turned up nothing, so let's run it through the 5 whys and see if we can figure it out.

- Problem – OSD failed to obtain rotating keys. This sounds like a heartbeat/auth problem.

- Why? Other nodes may have issues seeing this one on the network. Nope, this checks out.

- Why? The heartbeat could be messed up. Is the time off?

- Why? The time was off by one whole hour; fixing it solved the issue.


Yay! Having the wrong time set will make your keys (or tickets) look like they have expired during this handshake. Trying to start this OSD again resulted in a journal error. Not a big deal; let's just recreate the journal.
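Why a one-hour offset breaks authentication comes down to simple time arithmetic. This sketch models a ticket as valid until a fixed expiry time on the cluster's clock; it is a simplification, not Ceph's actual cephx implementation, and the 30-minute lifetime, the timestamps, and the assumption that the node's clock ran fast are all illustrative.

```python
from datetime import datetime, timedelta, timezone


def clock_skew(local_now: datetime, reference_now: datetime) -> timedelta:
    """Positive when the local clock runs ahead of the reference clock."""
    return local_now - reference_now


def ticket_looks_expired(expires_at: datetime, local_now: datetime) -> bool:
    """A ticket stamped by the cluster looks expired once the local clock passes its expiry."""
    return local_now >= expires_at


if __name__ == "__main__":
    reference = datetime(2018, 10, 29, 8, 0, tzinfo=timezone.utc)  # illustrative cluster time
    local = reference + timedelta(hours=1)                           # node clock one hour fast
    expires = reference + timedelta(minutes=30)                      # hypothetical ticket lifetime
    print("skew:", clock_skew(local, reference))
    print("looks expired:", ticket_looks_expired(expires, local))
```

Any skew larger than the remaining ticket lifetime makes a freshly issued ticket look stale, which matches the symptom of the OSD failing to obtain rotating keys.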

Let's try one more time…

Success! How do I know? Because systemctl status ceph-osd@60 reports success and running ceph -s shows it as up and in.

Note: When adding these disks back to the crush map, set their weight to 0, so that nothing gets moved to them, but you can read what data you need off of them.

I repeated this with all the other needed OSDs. After all the rebuilding and backfilling was done, I checked the pool again and saw all my images! Thank god. I changed the pool to replicate at the host level by switching it to the new CRUSH rule.

I waited for the pool to rebalance, and my cluster status was healthy again! At this point I didn't want to keep just 8 OSDs up on one node, so one by one I set them out and properly removed them from the cluster. I'll rejoin this node later, once my cluster status goes back to being healthy.

TL;DR


I had a PG that was entirely on a failed node. By reinstalling the OS again on that node, I was able to manually mount up the old OSDs and add them to the cluster. This allowed me to regain access to vital data pools and fix the crush rule set that caused this to begin with.

Thank you for taking the time to read this. I know it's lengthy, but I wanted to detail, to the best of my ability, everything I encountered and went through. If you have any questions or just want to say thanks, please feel free to send me an email at magusnebula@gmail.com.


Source: Stephen McElroy (Recovering from a complete node failure)